GPU cache is fast memory that stores data for quick access by the graphics processing unit (GPU). It enhances performance by reducing data latency and speeding up computations.

Understanding GPU cache is essential to maximizing GPU performance. The cache stores frequently accessed data for rapid retrieval, reducing the need to fetch it from slower video memory (VRAM) or system memory. When applications and games use the cache efficiently, they run more smoothly, with better responsiveness and faster rendering times.

This article covers what GPU cache is, why it matters for GPU performance, how it works, and how to optimize its usage for better overall system performance.

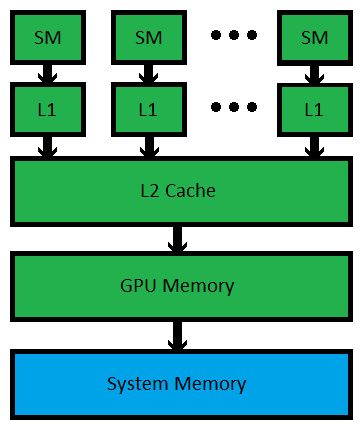

Credit: supercomputingblog.com

What Is GPU Cache

One term that comes up frequently in discussions of how a GPU (Graphics Processing Unit) works is “GPU cache.” The sections below explain what GPU cache is, why it matters, and how it affects overall system performance.

Introduction To GPU Cache

GPU cache refers to a specialized high-speed memory integrated within the graphics processing unit. It serves as a crucial component in the GPU’s architecture, facilitating quicker access to frequently used data and instructions. By storing this data closer to the processing cores, GPU cache minimizes the need to fetch data from slower system memory or storage, thereby enhancing the overall efficiency and speed of graphical computations.

Importance Of GPU Cache

The importance of GPU cache cannot be overstated. It plays a fundamental role in optimizing the performance of graphics-intensive applications and workloads. By reducing the latency associated with memory access, the cache enables the GPU to swiftly retrieve essential data, leading to smoother and more responsive visual experiences during gaming, content creation, and other GPU-accelerated tasks. How efficiently the cache is used directly influences the rendering speed, frame rates, and overall responsiveness of graphical applications.

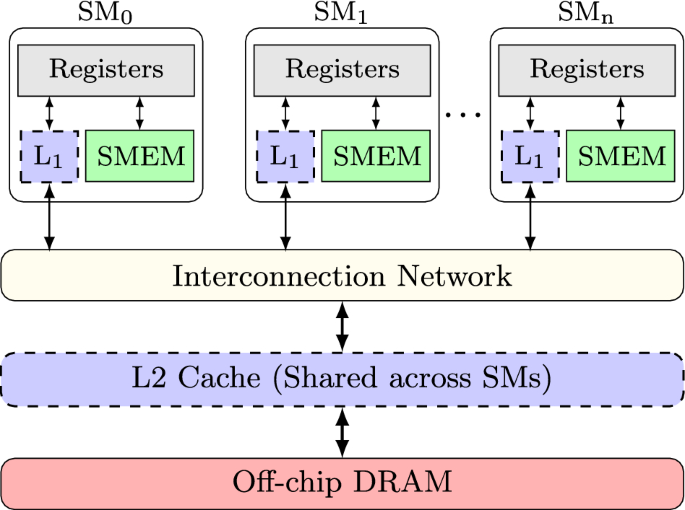

Credit: link.springer.com

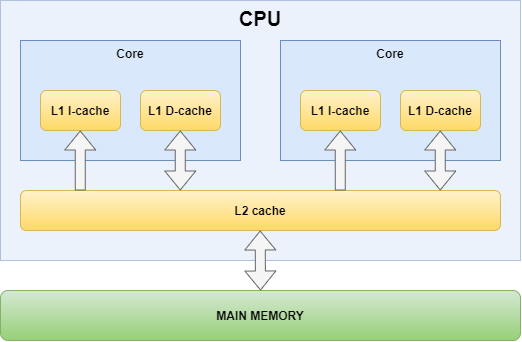

Types Of GPU Cache

L1 Cache

L1 Cache is the fastest cache level, located inside each GPU core (a streaming multiprocessor on NVIDIA GPUs or a compute unit on AMD GPUs).

- Small in size

- Provides quick access to frequently used data

L2 Cache

L2 Cache is larger than L1 Cache. On most GPUs it is not dedicated to individual cores but shared by all of them, sitting between the per-core L1 caches and video memory.

- Offers more storage than L1 Cache

- Helps in reducing memory latency

Shared Cache

Shared Cache is a cache shared among multiple cores on the GPU.

- Enhances data sharing between cores

- Improves overall GPU performance

Working Mechanism Of GPU Cache

The GPU cache is a high-speed memory component that stores frequently accessed data, allowing the GPU to swiftly retrieve information without accessing the main memory. This mechanism significantly enhances the overall performance and responsiveness of the GPU during intensive computing tasks, such as gaming, video editing, and 3D rendering.

The GPU cache is a vital component that stores frequently accessed data to improve processing speed.

Cache Hierarchy

GPU cache follows a hierarchy with different levels, such as L1, L2, and sometimes L3 cache, based on proximity to the processor.

- L1 Cache: Fastest cache directly connected to GPU cores for quick access to data.

- L2 Cache: Mid-level cache that holds more data than L1 but has longer access times.

- L3 Cache: Largest cache level, present only on some GPUs and shared among multiple cores.
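The lookup order through the hierarchy can be sketched with a toy two-level model. This is a minimal illustration, not a description of any particular GPU: the `load` function, the use of unbounded Python sets as caches, and the cycle counts are all assumptions made for the example.

```python
# Toy two-level lookup: check L1, then L2, then fall back to memory.
# Latencies are illustrative cycle counts, not measurements of real hardware.
L1_LATENCY, L2_LATENCY, MEMORY_LATENCY = 30, 200, 500

def load(address, l1, l2):
    """Return the cost in cycles of loading `address`, filling caches on a miss."""
    if address in l1:
        return L1_LATENCY
    if address in l2:
        l1.add(address)          # promote the data into the faster level
        return L1_LATENCY + L2_LATENCY
    l2.add(address)              # fill both levels on the way back from memory
    l1.add(address)
    return L1_LATENCY + L2_LATENCY + MEMORY_LATENCY

l1, l2 = set(), set()
first = load(0x100, l1, l2)   # misses in both levels
second = load(0x100, l1, l2)  # now served by L1
l1.clear()                    # pretend L1 lost the data
third = load(0x100, l1, l2)   # served by L2
print(first, second, third)
```

The three loads show the cost growing with each level the request has to travel through before it finds the data.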

Cache Coherency

Cache coherency ensures that when the same data is held in more than one cache, every core sees a consistent, up-to-date view of memory.

- When a core updates data in its cache, other caches holding a copy are informed so that they reflect the change.

- Coherency protocols help in minimizing conflicts and ensuring data integrity across the cache hierarchy.
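One simple way to picture coherency is a write-invalidate scheme, sketched below. This is a hypothetical toy model (the `read` and `write` helpers and the dictionary-based caches are invented for illustration); real GPUs rely on hardware protocols and explicit synchronization that are considerably more involved.

```python
# Toy write-invalidate scheme: two private caches over one shared memory.
memory = {"x": 1}
caches = [{}, {}]  # one private cache (a dict) per core

def read(core, key):
    if key not in caches[core]:        # miss: fill from shared memory
        caches[core][key] = memory[key]
    return caches[core][key]

def write(core, key, value):
    memory[key] = value                # write through to shared memory
    caches[core][key] = value
    for other, cache in enumerate(caches):
        if other != core:
            cache.pop(key, None)       # invalidate stale copies in other caches

read(0, "x")
read(1, "x")                 # both cores now cache x = 1
write(0, "x", 2)             # core 0 updates x; core 1's copy is invalidated
value_seen_by_core_1 = read(1, "x")
print(value_seen_by_core_1)
```

Because core 1's copy was invalidated, its next read misses and picks up the new value from shared memory rather than the stale one.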

Benefits Of GPU Cache

GPU cache plays a crucial role in enhancing the performance of graphics processing units. Its implementation offers several benefits that significantly improve the efficiency and speed of data processing for a wide range of applications, including gaming, video editing, and scientific simulations.

Faster Data Access

GPU cache facilitates faster data access by storing frequently accessed data closer to the processing units, reducing the need to fetch information from the main memory repeatedly. This proximity allows the GPU to retrieve data more quickly, resulting in faster rendering and computations.

Reduced Memory Congestion

Caching data directly on the GPU reduces congestion on the main memory, which can become overwhelmed when handling large datasets. As a result, the GPU can efficiently manage its memory resources, leading to smoother operation and improved overall performance.

Improved Performance

The utilization of GPU cache leads to a noticeable performance boost as it minimizes the latency associated with fetching data from the system memory. This expedited access to resources empowers the GPU to execute tasks more efficiently, resulting in enhanced frame rates, quicker data processing, and better responsiveness in applications.

Cache Miss And Cache Hit

When it comes to understanding the functionality of GPU cache, two important terms to be familiar with are “Cache Miss” and “Cache Hit.” These terms play a crucial role in determining the efficiency and performance of a graphics processing unit (GPU).

Definition Of Cache Miss

A Cache Miss occurs when the GPU attempts to access data that is not currently stored in its cache memory. The GPU must then fetch the data from a slower level of the memory hierarchy, such as video RAM or system memory. Accessing this slower memory increases latency and can reduce the overall performance of the GPU.

When a Cache Miss occurs, the GPU has to spend additional time retrieving the data which reduces the efficiency and speed of processing. It is important to minimize Cache Misses as they have a direct impact on the performance of the GPU.

Definition Of Cache Hit

On the other hand, Cache Hit refers to the situation when the GPU successfully retrieves the required data from its cache memory. When the GPU accesses data that is already cached, it eliminates the need to fetch the data from a slower memory source. This results in faster access times and improved performance of the GPU.

When a Cache Hit occurs, the GPU can quickly retrieve the required data, speeding up the processing and reducing latency. The more Cache Hits that occur, the better the overall performance of the GPU will be.

Effects On Performance

The occurrence of Cache Misses versus Cache Hits directly impacts the performance of a GPU. When there are more Cache Misses, the GPU experiences increased latency and slower processing speeds, leading to reduced performance. On the other hand, a higher number of Cache Hits results in faster access times, reduced latency, and improved performance.
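The relationship between hit rate and latency can be made concrete with the standard average-access-time formula. The sketch below uses made-up latencies (a 30-cycle hit and a 400-cycle miss penalty) purely for illustration; `average_access_time` is a hypothetical helper, not part of any GPU toolkit.

```python
def average_access_time(hit_rate, hit_latency, miss_penalty):
    """Average cycles per access: every access pays hit_latency, misses pay extra."""
    return hit_latency + (1.0 - hit_rate) * miss_penalty

# Illustrative numbers only: 30-cycle hit, 400 extra cycles on a miss.
low = average_access_time(0.50, 30, 400)    # half of all accesses miss
high = average_access_time(0.90, 30, 400)   # one access in ten misses
print(low, high)
```

With these assumed numbers, raising the hit rate from 50% to 90% cuts the average cost per access from roughly 230 cycles to roughly 70.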

To optimize the performance of a GPU, developers focus on minimizing the number of Cache Misses and maximizing the number of Cache Hits. This can be achieved through various techniques such as data prefetching, optimizing memory access patterns, and utilizing efficient caching algorithms.

Cache Optimization Techniques

The performance of a GPU (Graphics Processing Unit) relies heavily on how well its cache is used. Cache optimization aims to improve the efficiency of data access and reduce memory latency, ultimately enhancing the overall performance of the GPU. This section covers three key ideas: Spatial Locality, Temporal Locality, and Data Prefetching.

Spatial Locality

Spatial locality refers to the tendency of programs to access data that is close to the data they have recently accessed. In the context of GPUs, this means that when a thread accesses a memory location, it is likely to access nearby memory locations in the near future.

This behavior allows GPUs to optimize data fetching by bringing in a block of data that is located near the requested data into the cache. By doing so, the GPU can minimize the number of cache misses and reduce the time spent waiting for data to be fetched from the main memory.
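The effect of fetching whole blocks can be sketched with a small simulation. It assumes a line size of 32 elements and an unbounded cache, both chosen only to keep the example short; `count_misses` is a hypothetical helper.

```python
def count_misses(addresses, line_size=32):
    """Count misses in an unbounded cache that fetches whole lines of `line_size` elements."""
    cached_lines = set()
    misses = 0
    for address in addresses:
        line = address // line_size      # which block this address belongs to
        if line not in cached_lines:
            misses += 1
            cached_lines.add(line)       # the whole block is now cached
    return misses

n = 1024
sequential = count_misses(range(n))               # neighbouring addresses share a line
strided = count_misses(range(0, n * 32, 32))      # every access lands on a new line
print(sequential, strided)
```

Both patterns perform the same number of accesses, but the sequential one misses only once per line while the strided one misses on every access.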

Temporal Locality

Temporal locality is the principle that programs tend to access the same data multiple times within a short time frame. This means that if a thread accesses a specific memory location, it is likely to access the same location again soon.

To make use of temporal locality, GPUs employ caching techniques to keep recently accessed data in the cache for faster retrieval. This minimizes the need to fetch the same data repeatedly from the main memory, reducing memory latency and improving overall performance.
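A least-recently-used (LRU) replacement policy is one common way to keep recently accessed data around. The sketch below assumes LRU and a four-entry cache for illustration; actual GPU replacement policies vary and are often not publicly documented.

```python
from collections import OrderedDict

def lru_hits(accesses, capacity):
    """Count hits in a small cache that evicts the least recently used entry."""
    cache = OrderedDict()
    hits = 0
    for key in accesses:
        if key in cache:
            hits += 1
            cache.move_to_end(key)         # mark as most recently used
        else:
            cache[key] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used entry
    return hits

reused = lru_hits([0, 1, 2, 3] * 25, capacity=4)   # 100 accesses to 4 locations
streamed = lru_hits(range(100), capacity=4)        # 100 accesses, none repeated
print(reused, streamed)
```

The access pattern that revisits the same four locations hits on nearly every access, while the pattern that never reuses data gets no benefit from the cache at all.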

Data Prefetching

Data prefetching is a technique used to anticipate memory access patterns and fetch data into the cache before it is actually needed. By predicting the data that will be accessed next, the GPU can reduce the time spent waiting for data to be fetched from the main memory.

This technique involves analyzing the program’s memory access patterns and fetching the data into the cache proactively. By prefetching the data, the GPU reduces the latency associated with memory access, improving performance and efficiency.
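The simplest form of prefetching is to fetch the next line whenever a line is touched. The following sketch models that idea under strong simplifying assumptions: the cache is unbounded, prefetches arrive instantly, and bandwidth is free. The function name and parameters are invented for the example.

```python
def misses_with_prefetch(addresses, line_size=32, prefetch=True):
    """Count demand misses, optionally prefetching the next line on every access."""
    cached = set()
    misses = 0
    for address in addresses:
        line = address // line_size
        if line not in cached:
            misses += 1
            cached.add(line)
        if prefetch:
            cached.add(line + 1)   # bring in the next line ahead of demand
    return misses

without_prefetch = misses_with_prefetch(range(1024), prefetch=False)
with_prefetch = misses_with_prefetch(range(1024), prefetch=True)
print(without_prefetch, with_prefetch)
```

For a purely sequential scan, the prefetcher stays one line ahead, so only the very first access misses. Real access patterns are less predictable, and a wrong guess wastes bandwidth and cache space.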

Common Cache Related Issues

Cache Thrashing

Cache thrashing occurs when useful data is repeatedly evicted and reloaded, either because the working set does not fit in the cache or because several frequently used addresses compete for the same cache lines. Excessive context switching can have the same effect. This can lead to a significant decrease in performance, as the GPU spends more time handling cache misses and evictions than actually processing data.
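Conflict-driven thrashing is easiest to see in a direct-mapped cache, where each address can live in exactly one slot. The sketch below is a toy model with eight slots and a modulo mapping, both assumptions for illustration; GPU caches are typically set-associative, which reduces but does not eliminate this effect.

```python
def direct_mapped_misses(addresses, num_sets=8):
    """Direct-mapped cache: each address maps to exactly one slot (address % num_sets)."""
    slots = [None] * num_sets
    misses = 0
    for address in addresses:
        index = address % num_sets
        if slots[index] != address:
            misses += 1
            slots[index] = address   # evict whatever occupied the slot
    return misses

conflicting = direct_mapped_misses([0, 8] * 50)   # 0 and 8 fight over slot 0
friendly = direct_mapped_misses([0, 1] * 50)      # 0 and 1 use different slots
print(conflicting, friendly)
```

Both patterns touch only two addresses, yet the conflicting pair misses on every access because each load evicts the other.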

Cache Pollution

Cache pollution happens when the cache is filled with unnecessary or unimportant data, causing useful data to be flushed out. This can result in a higher cache miss rate, impacting the efficiency of the GPU and leading to slower processing speeds.
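The sketch below illustrates pollution with a small LRU cache in which a reused "hot" set competes with one-off streaming data. Letting the streaming data bypass the cache is shown as one possible remedy. The sizes, the LRU policy, and the `hot_hits` helper are all assumptions made for the example.

```python
from collections import OrderedDict

def hot_hits(rounds, capacity=8, bypass_stream=False):
    """Count hits on a 4-item hot set when each round also streams 8 one-off items."""
    cache = OrderedDict()
    hits = 0
    next_stream_key = 1000
    for _round in range(rounds):
        for key in range(4):                   # hot data, reused every round
            if key in cache:
                hits += 1
                cache.move_to_end(key)
            else:
                cache[key] = True
                if len(cache) > capacity:
                    cache.popitem(last=False)
        for _item in range(8):                 # one-off data, never reused
            if not bypass_stream:
                cache[next_stream_key] = True
                if len(cache) > capacity:
                    cache.popitem(last=False)
            next_stream_key += 1
    return hits

polluted = hot_hits(rounds=10)
protected = hot_hits(rounds=10, bypass_stream=True)
print(polluted, protected)
```

When the streaming data is cached, it pushes the hot set out before it can be reused; when it bypasses the cache, the hot set hits on every round after the first.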

Cache Invalidation

Cache invalidation marks cached data as no longer valid once the underlying data has changed, so that the next access fetches a fresh copy. Invalidating too aggressively causes unnecessary cache misses, while failing to invalidate lets the GPU continue to access and process stale data, leading to inefficiencies and potentially incorrect results.

Credit: www.rastergrid.com

Latest Advancements In GPU Cache Technology

Latest advancements in GPU cache technology have brought significant improvements in performance and efficiency. Cutting-edge developments in unified cache architecture and smart cache management have revolutionized how GPUs handle data processing.

Unified Cache Architecture

A unified cache architecture combines multiple cache levels for faster data access.

Smart Cache Management

Smart cache management uses AI-driven algorithms to optimize cache usage for enhanced performance.

Future Trends In GPU Cache

Frequently Asked Questions About GPU Cache

What Is GPU Cache?

GPU cache is a high-speed memory that stores frequently accessed data to reduce the latency between the GPU and the main memory. It acts as a temporary storage for data, instructions, and previous calculations, allowing the GPU to quickly retrieve information and improve overall performance.

The GPU cache helps to minimize memory access time and optimize computing tasks.

How Does GPU Cache Work?

GPU cache works by storing frequently used data close to the processor, reducing the time it takes to access information from the main memory. When the GPU needs data, it checks the cache first. If the data is found in the cache, it is retrieved quickly.

If not, the GPU fetches the required data from the main memory and stores a copy in the cache. This caching process allows for faster data retrieval and processing, improving GPU performance.

Why Is GPU Cache Important?

GPU cache is important because it greatly speeds up data access, reducing the time it takes for the GPU to perform calculations. By storing frequently accessed data in a cache, the GPU can quickly retrieve information instead of accessing the main memory, which is relatively slower.

This results in improved graphics rendering, faster processing times, and overall better performance in GPU-intensive tasks such as gaming, video editing, and 3D rendering.

Does GPU Cache Affect Gaming Performance?

Yes, GPU cache has a significant impact on gaming performance. The cache stores frequently used data, such as textures, shaders, and geometry, which are crucial for rendering high-quality graphics in games. By efficiently caching this data, the GPU can quickly access and process it, resulting in smoother gameplay, reduced latency, and improved frame rates.

A larger and more efficient GPU cache can enhance gaming performance, especially in demanding and graphically intensive games.

Conclusion

In essence, GPU cache plays a significant role in boosting the overall performance of your graphics processing unit. By storing frequently accessed data, it minimizes the need to retrieve information from system memory, thereby enhancing speed and efficiency. Understanding GPU cache is crucial for optimizing gaming and graphics-heavy tasks, ensuring a seamless and fluid experience.
